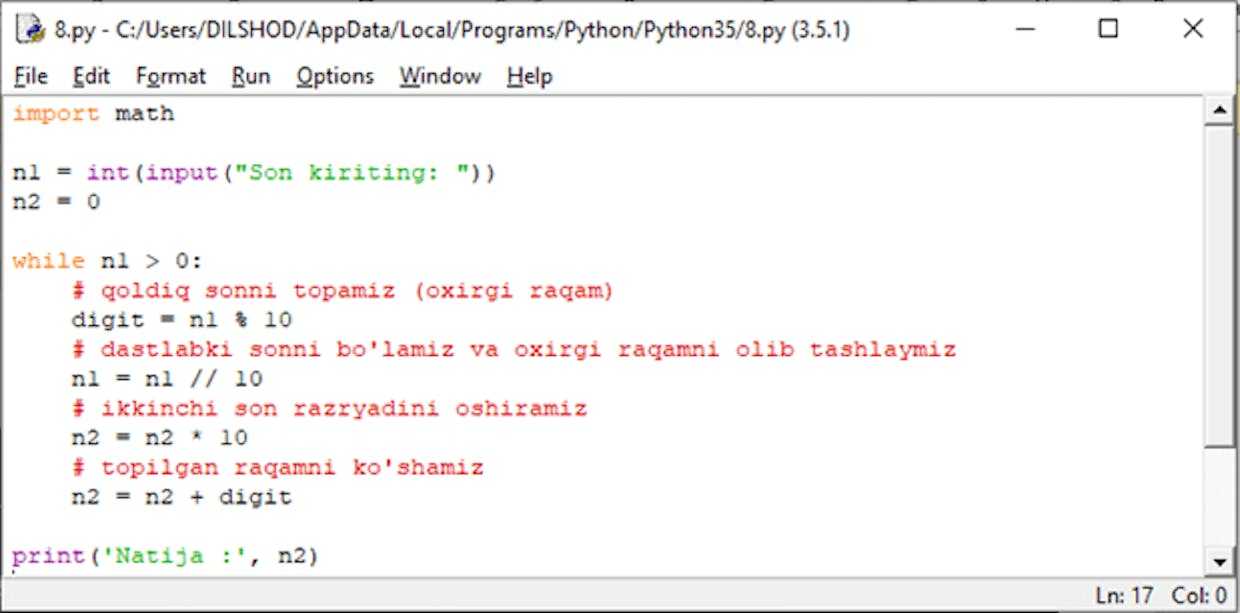

Reversing the digits of a number.

When the user enters a multi-digit number, we need to write a program that writes its digits in reverse order. For example, 3658 should be written as 8563. To do this, we develop the following algorithm.

1. Find the remainder when the number is divided by 10. This is its last digit.

2. Add this digit to the new (reversed) number.

3. Divide the original number by 10 using integer division. This removes its last digit.

4. Find the remainder when the number that remains is divided by 10. This is the next digit.

5. Multiply the new number by 10. This adds one digit position and shifts the digits collected so far one place to the left (for example, 8 becomes 80).

6. Add the digit obtained in step 4 to the new number.

7. Repeat this process until the original number becomes 0.
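The steps above can be sketched in Python as follows. This is a minimal illustration that assumes a non-negative integer input. The function name `reverse_digits` is hypothetical and is not taken from the code shown in the image.

```python
def reverse_digits(n: int) -> int:
    """Reverse the decimal digits of a non-negative integer (e.g. 3658 -> 8563)."""
    if n < 0:
        raise ValueError("expected a non-negative integer")
    reversed_number = 0
    while n > 0:                      # step 7: stop once the original number is 0
        last_digit = n % 10           # steps 1 and 4: remainder after dividing by 10
        # steps 2, 5 and 6: shift the collected digits left and append the new one
        reversed_number = reversed_number * 10 + last_digit
        n //= 10                      # step 3: drop the last digit
    return reversed_number

print(reverse_digits(3658))  # 8563
```

Because the result is an integer, trailing zeros of the input disappear. For example, 1200 becomes 21.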

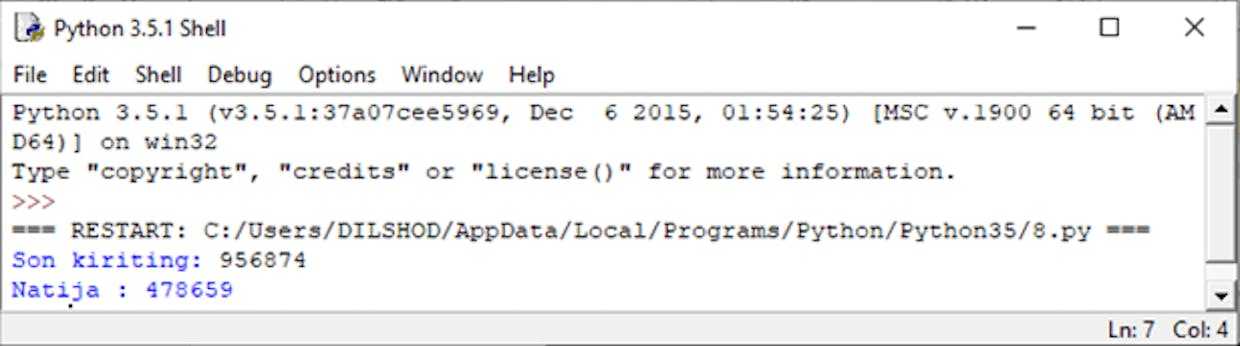

Result:

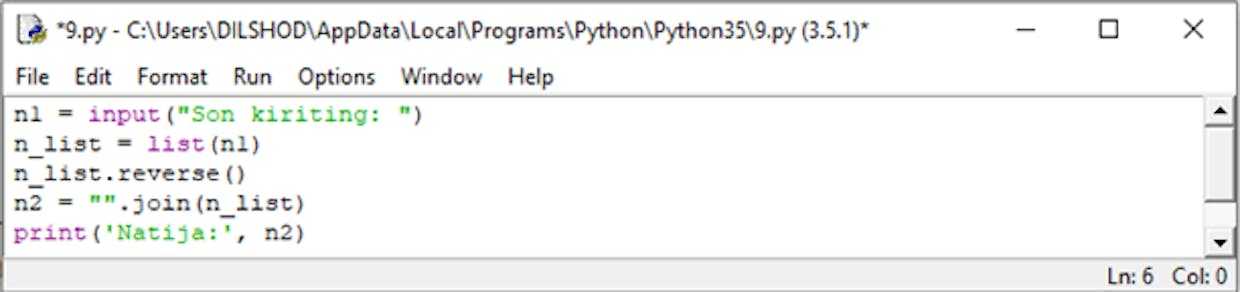

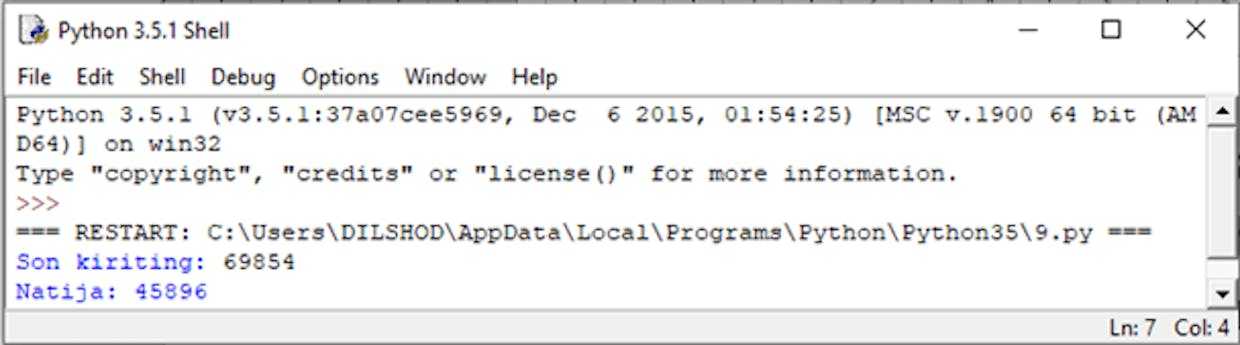

Solving the problem with an algorithm like this works in any programming language. Python, however, provides the list method reverse() for such cases, which reverses the order of the elements. The string method join() then combines them back into a single string.
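Here is a minimal sketch of this string-based approach. It assumes a non-negative integer that is first converted to a string. The helper name `reverse_digits_str` is hypothetical.

```python
def reverse_digits_str(n: int) -> str:
    """Reverse the digits of a non-negative integer using list.reverse() and str.join()."""
    digits = list(str(n))   # 3658 -> ['3', '6', '5', '8']
    digits.reverse()        # reverses the list in place -> ['8', '5', '6', '3']
    return "".join(digits)  # joins the characters back into one string -> '8563'

print(reverse_digits_str(3658))       # 8563
print(int(reverse_digits_str(1200)))  # 21 (int() drops the leading zeros of '0021')
```

Unlike the arithmetic version, the string version keeps leading zeros ('0021') until you convert the result back with int().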

Result:

6. Warren Buffett

- Age: 92

- Residence: Nebraska

- CEO: Berkshire Hathaway (BRK.A)

- Net Worth: $103 billion

- Berkshire Hathaway Ownership Stake: 16% ($102 billion)

- Other Assets: $1.05 billion in cash

The most famous living value investor, Warren Buffett filed his first tax return in 1944 at age 14, declaring earnings from his boyhood paper route. He first bought shares in a textile company called Berkshire Hathaway in 1962, becoming the majority shareholder by 1965. Buffett expanded the company’s holdings to insurance and other investments in 1967.

Berkshire Hathaway now has a market capitalization of $657 billion, with a single Class A share trading at more than $443,841 as of Nov. 1, 2022.

Widely known as the Oracle of Omaha, Buffett is a buy-and-hold investor who built his fortune by acquiring undervalued companies. More recently, Berkshire Hathaway has invested in large, well-known companies. Its portfolio of wholly owned subsidiaries includes interests in insurance, energy distribution, and railroads as well as consumer products.

Buffett is a notable Bitcoin skeptic.

Buffett has dedicated much of his wealth to philanthropy. Between 2006 and 2020, he gave away $41 billion—mostly to the Bill & Melinda Gates Foundation and his children’s charities. Buffett launched the Giving Pledge alongside Bill Gates in 2010.

Now 92 years old, Buffett still serves as CEO, but in 2021 he hinted that his successor might be Gregory Abel, head of Berkshire’s non-insurance operations.

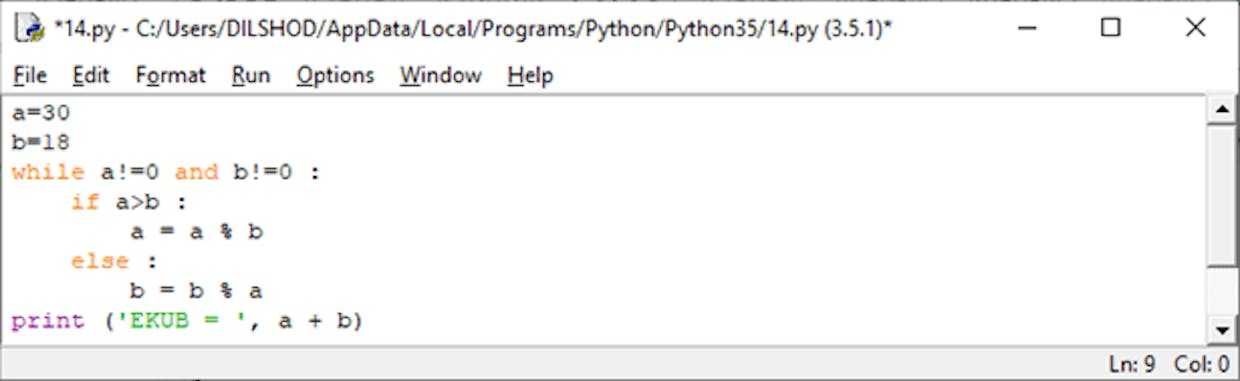

Euclid's algorithm. Finding the greatest common divisor of two integers.

Algorithms for finding the greatest common divisor (GCD; EKUB in Uzbek) of two integers:

Algorithm 1.

- Divide the larger of the two given numbers by the smaller one.

- If the remainder is 0, the smaller number is the GCD.

- If there is a nonzero remainder, replace the larger number with the smaller number and the smaller number with the remainder.

- Return to step 1.

For example, let us compute the GCD of 30 and 18:

30 / 18 = 1 (remainder 12)

18 / 12 = 1 (remainder 6)

12 / 6 = 2 (remainder 0)

Done: GCD(30, 18) = 6.
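Algorithm 1 (repeated division with remainder) can be sketched in Python as follows. It assumes non-negative integers. The name `gcd_division` is hypothetical, and the standard library's `math.gcd` is printed only as a cross-check.

```python
import math

def gcd_division(a: int, b: int) -> int:
    """Algorithm 1: Euclid's algorithm by repeated division with remainder."""
    larger, smaller = max(a, b), min(a, b)
    while smaller != 0:
        remainder = larger % smaller
        # Remainder 0 means `smaller` divides `larger`, so the loop ends with it as the GCD;
        # otherwise replace the larger number by the smaller, and the smaller by the remainder.
        larger, smaller = smaller, remainder
    return larger

print(gcd_division(30, 18))  # 6  (30 % 18 = 12, 18 % 12 = 6, 12 % 6 = 0)
print(math.gcd(30, 18))      # 6, standard-library cross-check
```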

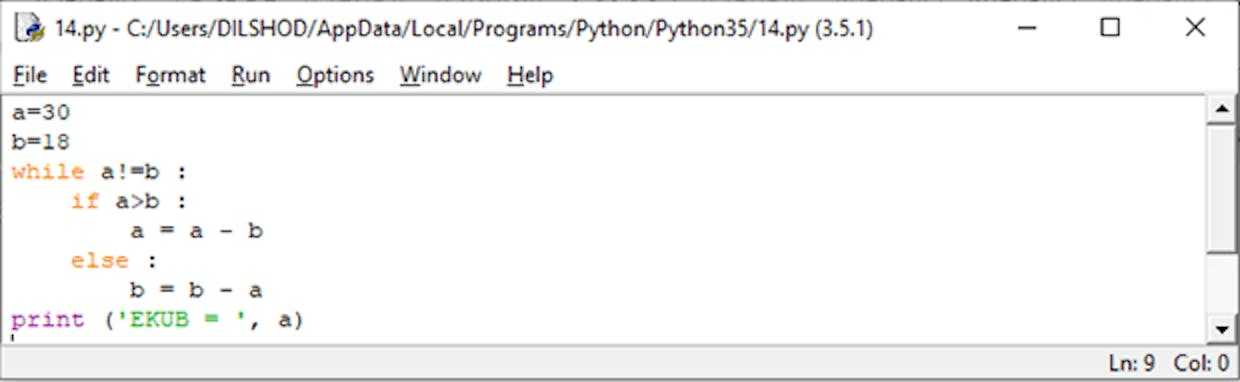

Algorithm 2.

- Subtract the smaller of the two given numbers from the larger one.

- If the difference is 0, the given numbers are equal, and that number itself is the GCD.

- If the difference is nonzero, replace the larger number with the smaller number and the smaller number with the difference.

- Return to step 1.

For example, let us compute the GCD of 30 and 18:

30 - 18 = 12

18 - 12 = 6

12 - 6 = 6

6 - 6 = 0

Done: GCD(30, 18) = 6.
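Algorithm 2 (repeated subtraction) can be sketched the same way. It works only for positive integers, because with a zero argument the loop would never reach a zero difference. The name `gcd_subtraction` is hypothetical.

```python
import math

def gcd_subtraction(a: int, b: int) -> int:
    """Algorithm 2: GCD by repeated subtraction (positive integers only)."""
    if a <= 0 or b <= 0:
        raise ValueError("both numbers must be positive")
    while True:
        larger, smaller = max(a, b), min(a, b)
        difference = larger - smaller
        if difference == 0:
            return larger  # the numbers are equal, so this value is the GCD
        # Replace the larger number with the smaller one, and the smaller one with the difference.
        a, b = smaller, difference

print(gcd_subtraction(30, 18))  # 6  (30-18=12, 18-12=6, 12-6=6, 6-6=0)
print(math.gcd(30, 18))         # 6, standard-library cross-check
```

The subtraction version needs many more steps than the division version when the two numbers differ greatly in size (e.g. 1000 and 1), because each subtraction removes only one copy of the smaller number.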

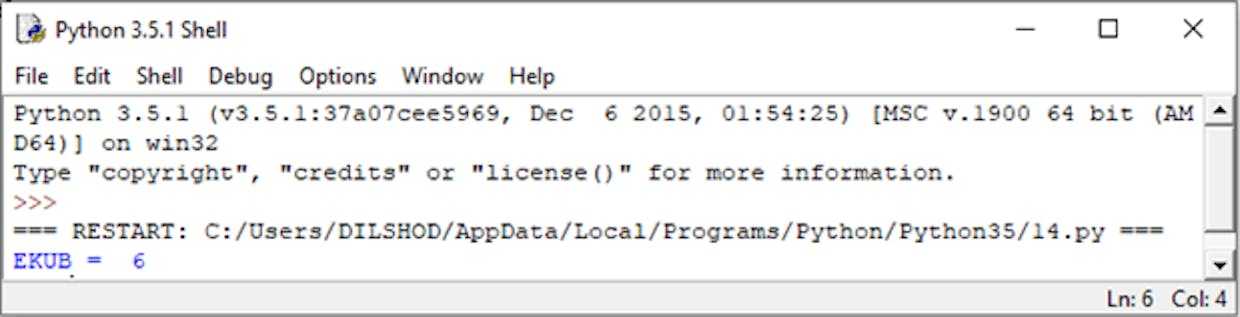

Result:

What is the BART HuggingFace Transformer Model in NLP?

HuggingFace Transformer models provide an easy-to-use implementation of some of the best performing models in natural language processing. Transformer models are the current state-of-the-art (SOTA) in several NLP tasks such as text classification, text generation, text summarization, and question answering.

The original Transformer is based on an encoder-decoder architecture and is a classic sequence-to-sequence model. The model's input and output are sequences (text). The encoder learns a high-dimensional representation of the input, which the decoder then maps to the output. This architecture introduced a new form of learning for language-related tasks. The models spawned from it achieve outstanding results, outperforming earlier deep neural network-based methods.

Since the vanilla Transformer was introduced, several models inspired by it have used the architecture to improve benchmark results on NLP tasks. Transformer models are first pre-trained on a large text corpus (such as BookCorpus or Wikipedia). This pretraining ensures that the model “understands language” and has a decent starting point for learning further tasks. Hence, after this step, we only have a language model. The model's ability to understand language is highly significant, since it determines how well you can further train the model for tasks such as text classification or text summarization.

BART is one such Transformer model. It combines components from other Transformer models (a bidirectional, BERT-like encoder and an autoregressive, GPT-like decoder) and improves the pretraining objective. BART, or Bidirectional and Auto-Regressive Transformers, was proposed in the paper "BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension." The HuggingFace BART model supports both the pre-trained weights and weights fine-tuned for question answering, text summarization, conditional text generation, mask filling, and sequence classification.

So, without further ado, let's explore the BART model: its uses, its architecture, how it works, and a HuggingFace example.


B0 to B7 variants of EfficientNet

(This section provides some details on «compound scaling» and can be skipped if you're only interested in using the models.)

Based on the original paper, people may have the impression that EfficientNet is a continuous family of models created by arbitrarily choosing the scaling factor in Eq. (3) of the paper. However, the choice of resolution, depth, and width is also restricted by many factors:

- Resolution: Resolutions not divisible by 8, 16, etc. cause zero-padding near the boundaries of some layers, which wastes computational resources. This especially applies to the smaller variants of the model, so the input resolutions for B0 and B1 are chosen as 224 and 240.

- Depth and width: The building blocks of EfficientNet require channel sizes that are multiples of 8.

- Resource limit: Memory limitations may bottleneck resolution while depth and width can still increase. In such a situation, increasing depth and/or width while keeping the resolution fixed can still improve performance.
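The following sketch makes these constraints concrete. It uses the base coefficients reported in the EfficientNet paper (α = 1.2 for depth, β = 1.1 for width, γ = 1.15 for resolution, chosen so that α·β²·γ² ≈ 2). It also includes a channel-rounding helper modeled on the one used in common EfficientNet implementations. Treat both helpers (`compound_scaling`, `round_filters`) as illustrative assumptions, not the Keras source.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution bases from the EfficientNet paper


def compound_scaling(phi: float) -> tuple[float, float, float]:
    """Return the raw (depth, width, resolution) multipliers for compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi


def round_filters(filters: int, width_coefficient: float, divisor: int = 8) -> int:
    """Scale a channel count by the width multiplier and round it to a multiple of `divisor`."""
    scaled = filters * width_coefficient
    new_filters = max(divisor, int(scaled + divisor / 2) // divisor * divisor)
    if new_filters < 0.9 * scaled:  # never round down by more than 10%
        new_filters += divisor
    return int(new_filters)


# The raw formula gives 224 * 1.15 for phi = 1, which is not a multiple of 8;
# the hand-picked B1 resolution is 240 instead.
depth, width, resolution = compound_scaling(1)
print(depth, width, 224 * resolution)
print(round_filters(32, 1.4))  # stem channels with a 1.4 width multiplier -> 48
```

The rounding helper shows why width cannot be scaled continuously: any multiplier is snapped to a multiple of 8 channels.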

As a result, the depth, width, and resolution of each EfficientNet variant are hand-picked and proven to produce good results, though they may differ significantly from what the compound scaling formula gives.

Therefore, the Keras implementation (detailed below) provides only these 8 models, B0 to B7, instead of allowing an arbitrary choice of width, depth, and resolution parameters.

Uninstall FromDocToPDF Toolbar and related programs via Control Panel

We recommend reviewing the list of installed programs and looking for FromDocToPDF Toolbar as well as any other suspicious or unfamiliar programs. Instructions for different versions of Windows are given below. In some cases, FromDocToPDF Toolbar is protected by a malicious process or service that prevents you from uninstalling it. If FromDocToPDF Toolbar will not uninstall, or you get an error saying you lack sufficient permissions to remove it, perform the steps below in Safe Mode or Safe Mode with Networking, or use a dedicated removal tool for FromDocToPDF Toolbar.

Windows 10

- Click the Start menu and select Settings.

- Click System and select Apps & features in the list on the left.

- Find FromDocToPDF Toolbar in the list and click the Uninstall button next to it.

- If prompted, confirm by clicking Uninstall in the window that opens.

Windows 8/8.1

- Right-click in the lower-left corner of the screen (in desktop mode).

- In the menu that opens, select Control Panel.

- Click the Uninstall a program link under Programs and Features.

- Find FromDocToPDF Toolbar and any other suspicious programs in the list.

- Click the Uninstall button.

- Wait for the uninstallation to finish.

Windows 7/Vista

- Click Start and select Control Panel.

- Select Programs and Features, then Uninstall a program.

- Find FromDocToPDF Toolbar in the list of installed programs.

- Click the Uninstall button.

Windows XP

- Click Start.

- In the menu, select Control Panel.

- Select Add or Remove Programs.

- Find FromDocToPDF Toolbar and related programs.

- Click the Remove button.

What other standards a good VPN service should support

The most important feature of any good VPN service is support for OpenVPN, an open-source protocol that provides secure access to remote resources over an encrypted tunnel.

This protocol undergoes regular audits, testing, and public scrutiny. Because a large community of enthusiasts works on it intensively, problems are found promptly and discovered vulnerabilities are fixed quickly.

A VPN service's own DNS servers keep users from exposing their internet provider's details to the resources they access.

PGP support simplifies the user's interaction with the VPN service. It provides all the necessary operations, such as anonymization and encryption, as a library or program that an ordinary user can work with easily.

Astraphobia

Astraphobia is a fear of thunder and lightning. People with this phobia experience overwhelming feelings of fear when they encounter such weather-related phenomena. Symptoms of astraphobia are often similar to those of other phobias and include shaking, rapid heart rate, and increased respiration.

During a thunder or lightning storm, people with this disorder may go to great lengths to take shelter or hide from the weather event such as hiding in bed under the covers or even ducking inside a closet or bathroom. People with this phobia also tend to develop an excessive preoccupation with the weather.

7. Larry Ellison

- Age: 78

- Residence: Hawaii

- Co-founder, Chair, and CTO: Oracle (ORCL)

- Net Worth: $93.7 billion

- Oracle Ownership Stake: 40%+ ($65.6 billion)

- Other Assets: Tesla equity ($10.2 billion public asset), $17.2 billion in cash and real estate

Larry Ellison was born in New York City to a 19-year-old single mother. After dropping out of the University of Chicago in 1966, Ellison moved to California and worked as a computer programmer. In 1973, he joined the electronics company Ampex, where he met future partners Ed Oates and Bob Miner. Three years later, Ellison moved to Precision Instruments, serving as the company’s vice president of research and development.

In 1977, Ellison founded Software Development Laboratories alongside Oates and Miner. Two years later, the company released Oracle, the first commercial relational database program to use Structured Query Language. The database program proved so popular that SDL would change its name to Oracle Systems Corporation in 1982. Ellison gave up the CEO role at Oracle in 2014 after 37 years. He joined Tesla’s board in December 2018 and stepped down in June 2022.

Oracle is the world’s second-largest software company, providing a wide variety of cloud computing programs as well as Java and Linux code and the Oracle Exadata computing platform.

Oracle has acquired numerous large companies, including human resources management systems provider PeopleSoft in 2005, customer relationship management applications provider Siebel in 2006, enterprise infrastructure software provider BEA Systems in 2008, and hardware-and-software developer Sun Microsystems in 2009. In December 2021, Oracle agreed to buy medical records software provider Cerner (CERN) for $28.3 billion in cash.

Long known for extravagant spending, Ellison has invested heavily in luxury real estate over the last decade. Perhaps his single most impressive acquisition was the $300 million purchase of nearly the entire Hawaiian island of Lanai in 2012, where the billionaire has lived since 2020. Ellison has built a hydroponics farm and a luxury spa on the island.

Ellison has focused his philanthropy on medical research. In 2016, he gave $200 million to the University of Southern California for a new cancer research center. Ellison backed the Oracle Team USA sailing team, which won the America’s Cup racing series in 2010 and 2013.

Historic:

2017 Release Candidate Translation Teams:

- Azerbaijani: Rashad Aliyev

- Chinese RC2: Rip, 包悦忠, 李旭勤, 王颉, 王厚奎, 吴楠, 徐瑞祝, 夏天泽, 张家银, 张剑钟, 赵学文 (in no particular order; listed by surname in pinyin). OWASP Top 10 2017 RC2 - Chinese (PDF)

- French: Ludovic Petit, Sébastien Gioria

- Others to be listed.

2013 Completed Translations:

- Arabic 2013: OWASP Top 10 2013 - Arabic (PDF). Translated by: Mohannad Shahat, Fahad (@SecurityArk), Abdulellah Alsaheel, Khalifa Alshamsi, Sabri (KING SABRI), and Mohammed Aldossary

- Chinese 2013: OWASP Top 10 2013 - Chinese (PDF). Project leads: Rip, 王颉; contributors: 陈亮, 顾庆林, 胡晓斌, 李建蒙, 王文君, 杨天识, 张在峰

- Czech 2013: OWASP Top 10 2013 - Czech (PDF), OWASP Top 10 2013 - Czech (PPTX). CSIRT.CZ - CZ.NIC, z.s.p.o. (.cz domain registry): Petr Zavodsky, Vaclav Klimes, Zuzana Duracinska, Michal Prokop, Edvard Rejthar, Pavel Basta

- French 2013: OWASP Top 10 2013 - French (PDF). Translated by: Ludovic Petit, Sébastien Gioria, Erwan Abgrall, Benjamin Avet, Jocelyn Aubert, Damien Azambour, Aline Barthelemy, Moulay Abdsamad Belghiti, Gregory Blanc, Clément Capel, Etienne Capgras, Julien Cayssol, Antonio Fontes, Ely de Travieso, Nicolas Grégoire, Valérie Lasserre, Antoine Laureau, Guillaume Lopes, Gilles Morain, Christophe Pekar, Olivier Perret, Michel Prunet, Olivier Revollat, Aymeric Tabourin

- German 2013: OWASP Top 10 2013 - German (PDF). Translated by: Frank Dölitzscher, Torsten Gigler, Tobias Glemser, Dr. Ingo Hanke, Thomas Herzog, Kai Jendrian, Ralf Reinhardt, Michael Schäfer

- Hebrew 2013: OWASP Top 10 2013 - Hebrew (PDF). Translated by: Or Katz, Eyal Estrin, Oran Yitzhak, Dan Peled, Shay Sivan

- Italian 2013: OWASP Top 10 2013 - Italian (PDF). Translated by: Michele Saporito, Paolo Perego, Matteo Meucci, Sara Gallo, Alessandro Guido, Mirko Guido Spezie, Giuseppe Di Cesare, Paco Schiaffella, Gianluca Grasso, Alessio D’Ospina, Loredana Mancini, Alessio Petracca, Giuseppe Trotta, Simone Onofri, Francesco Cossu, Marco Lancini, Stefano Zanero, Giovanni Schmid, Igor Falcomata’

- Japanese 2013: OWASP Top 10 2013 - Japanese (PDF). Translated by: Chia-Lung Hsieh (ryusuke.tw(at)gmail.com); reviewed by: Hiroshi Tokumaru, Takanori Nakanowatari

- Korean 2013: OWASP Top 10 2013 - Korean (PDF). Translated by (in Korean alphabetical order): 김병효, 김지원, 김효근, 박정훈, 성영모, 성윤기, 송보영, 송창기, 유정호, 장상민, 전영재, 정가람, 정홍순, 조민재, 허성무

- Brazilian Portuguese 2013: OWASP Top 10 2013 - Brazilian Portuguese (PDF). Translated by: Carlos Serrão, Marcio Machry, Ícaro Evangelista de Torres, Carlo Marcelo Revoredo da Silva, Luiz Vieira, Suely Ramalho de Mello, Jorge Olímpia, Daniel Quintão, Mauro Risonho de Paula Assumpção, Marcelo Lopes, Caio Dias, Rodrigo Gularte

- Spanish 2013: OWASP Top 10 2013 - Spanish (PDF). Translated by: Gerardo Canedo, Jorge Correa, Fabien Spychiger, Alberto Hill, Johnatan Stanley, Maximiliano Alonzo, Mateo Martinez, David Montero, Rodrigo Martinez, Guillermo Skrilec, Felipe Zipitria, Rafael Gil, Christian Lopez, jonathan fernandez, Paola Rodriguez, Hector Aguirre, Roger Carhuatocto, Juan Carlos Calderon, Marc Rivero López, Carlos Allendes, Manuel Ramírez, Marco Miranda, Mauricio D. Papaleo Mayada, Felipe Sanchez, Juan Manuel Bahamonde, Adrià Massanet, Ramiro Pulgar, German Alonso Suárez Guerrero, Jose A. Guasch, Edgar Salazar

- Ukrainian 2013: OWASP Top 10 2013 - Ukrainian (PDF). Translated by: Kateryna Ovechenko, Yuriy Fedko, Gleb Paharenko, Yevgeniya Maskayeva, Sergiy Shabashkevich, Bohdan Serednytsky

About the dataset:

We will be using the Boston housing dataset, which can be loaded through sklearn. Note that `load_boston` was deprecated in scikit-learn 1.0 and removed in 1.2, so the code below requires an older scikit-learn version. We will select features using the methods listed above for the regression problem of predicting the "MEDV" column. In the following code snippet, we import all the required libraries and load the dataset.

```python
# Importing libraries
import pandas as pd
import numpy as np
import matplotlib
import matplotlib.pyplot as plt
import seaborn as sns
import statsmodels.api as sm
from sklearn.datasets import load_boston  # removed in scikit-learn 1.2; needs an older version
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.feature_selection import RFE
from sklearn.linear_model import RidgeCV, LassoCV, Ridge, Lasso
# Jupyter/IPython magic: render plots inline in the notebook
%matplotlib inline

# Loading the dataset
x = load_boston()
df = pd.DataFrame(x.data, columns=x.feature_names)
df["MEDV"] = x.target            # add the target as a column
X = df.drop(columns="MEDV")      # feature matrix
y = df["MEDV"]                   # target variable
df.head()
```

1. Filter Method:

As the name suggests, in this method you filter the features and keep only the relevant subset. The model is built after the features are selected. The filtering here is done using a correlation matrix, most commonly with Pearson correlation.

Here we will first plot the Pearson correlation heatmap and look at the correlation of the independent variables with the output variable MEDV. We will only select features whose correlation with the output variable is above 0.5 in absolute value.

The correlation coefficient takes values between -1 and 1:

- A value closer to 0 implies weaker correlation (exactly 0 implies no linear correlation).

- A value closer to 1 implies stronger positive correlation.

- A value closer to -1 implies stronger negative correlation.
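For reference, here is the standard definition of the Pearson correlation coefficient between a feature $x$ and the target $y$ over $n$ samples, where $\bar{x}$ and $\bar{y}$ are the sample means. This is the quantity that `df.corr()` computes by default:

$$
r_{xy} = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}}
$$

The numerator is the co-variation of $x$ and $y$. Dividing by the two spread terms normalizes it, which is why $r_{xy}$ always lies in $[-1, 1]$.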

```python
# Using Pearson correlation
plt.figure(figsize=(12, 10))
cor = df.corr()
sns.heatmap(cor, annot=True, cmap=plt.cm.Reds)
plt.show()
```

```python
# Correlation with output variable
cor_target = abs(cor["MEDV"])
# Selecting highly correlated features (MEDV itself is included, with r = 1)
relevant_features = cor_target[cor_target > 0.5]
relevant_features
```

As we can see, only the features RM, PTRATIO and LSTAT are highly correlated with the output variable MEDV. Hence we will drop all other features apart from these. However this is not the end of the process. One of the assumptions of linear regression is that the independent variables need to be uncorrelated with each other. If these variables are correlated with each other, then we need to keep only one of them and drop the rest. So let us check the correlation of selected features with each other. This can be done either by visually checking it from the above correlation matrix or from the code snippet below.

```python
print(df[["LSTAT", "PTRATIO"]].corr())
print(df[["RM", "LSTAT"]].corr())
```

The output shows that the variables RM and LSTAT are highly correlated with each other (-0.613808). Hence we keep only one of them and drop the other. We will keep LSTAT, since its correlation with MEDV is higher than that of RM.

After dropping RM, we are left with two features, LSTAT and PTRATIO. These are the final features selected by the Pearson correlation filter.
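The two-step filter described above can be sketched as a small reusable function. This is a sketch under stated assumptions. The function name `pearson_filter` is hypothetical, and the same 0.5 threshold is assumed for the feature-to-feature check (the text does not state one explicitly). Because the Boston loader is gone from recent scikit-learn, the demo uses synthetic data instead.

```python
import numpy as np
import pandas as pd


def pearson_filter(df: pd.DataFrame, target: str, threshold: float = 0.5) -> list:
    """Keep features strongly correlated with `target`, then drop redundant ones."""
    cor = df.corr()  # Pearson correlation by default
    # Step 1: keep features whose |r| with the target exceeds the threshold.
    target_cor = cor[target].drop(target).abs()
    candidates = target_cor[target_cor > threshold].sort_values(ascending=False)
    # Step 2: walk from the strongest to the weakest candidate and skip any feature
    # that is strongly correlated with one already kept (the one closer to the target wins).
    kept = []
    for feature in candidates.index:
        if all(abs(cor.loc[feature, other]) <= threshold for other in kept):
            kept.append(feature)
    return kept


# Synthetic demo data (not the Boston dataset).
rng = np.random.default_rng(0)
n = 500
a = rng.normal(size=n)
b = rng.normal(size=n)
demo = pd.DataFrame({
    "A": a,
    "A_COPY": a + 0.1 * rng.normal(size=n),  # almost a duplicate of A
    "B": b,
    "NOISE": rng.normal(size=n),             # unrelated to the target
})
demo["TARGET"] = 2 * a + 2 * b + 0.5 * rng.normal(size=n)
print(pearson_filter(demo, "TARGET"))  # one of A / A_COPY, plus B
```

On the Boston data, the same logic would keep LSTAT over RM, because LSTAT has the larger absolute correlation with MEDV.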

2. Wrapper Method:

A wrapper method needs a machine learning algorithm and uses its performance as the evaluation criterion. This means you feed the features to the selected machine learning algorithm and add or remove features based on the model's performance. This is an iterative and computationally expensive process, but it is usually more accurate than the filter method.

There are different wrapper methods such as Backward Elimination, Forward Selection, Bidirectional Elimination and RFE. We will discuss Backward Elimination and RFE here.
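Here is a minimal sketch of RFE using scikit-learn's `RFE` class with `LinearRegression`. Because the Boston loader is unavailable in recent scikit-learn versions, the sketch assumes synthetic data from `make_regression`. RFE fits the model repeatedly and, with the default `step=1`, removes the feature with the smallest absolute coefficient each round until `n_features_to_select` features remain.

```python
from sklearn.datasets import make_regression
from sklearn.feature_selection import RFE
from sklearn.linear_model import LinearRegression

# Synthetic data: 10 features, only 3 of which actually influence the target.
X, y = make_regression(n_samples=200, n_features=10, n_informative=3,
                       noise=5.0, random_state=0)

# Recursively drop the weakest feature (smallest |coef_|) until 3 remain.
rfe = RFE(estimator=LinearRegression(), n_features_to_select=3)
rfe.fit(X, y)

print("Selected feature mask:", rfe.support_)
print("Feature ranking (1 = selected):", rfe.ranking_)
```

Features eliminated earlier get larger ranks, so `ranking_` also shows the order in which RFE discarded them.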

What Is CWE?

There are plenty of acronyms in every industry, but CWE (Common Weakness Enumeration) is a big one for software security. Static analysis and security tools must be backed by knowledge of what to look for and how to reach the level of risk appropriate for your project.

CWE seeks to make vulnerability management more streamlined and accessible. The community-developed catalog features hardware and software weaknesses and is described as “a common language, a measuring stick for security tools, and a baseline for weakness identification, mitigation, and prevention efforts.”

Who Owns CWE?

As mentioned, the Mitre Corporation owns and maintains the CWE. It also manages FFRDCs, or federally funded research and development centers, for the U.S. Department of Homeland Security, as well as for agencies in healthcare, aviation, defense, and, of course, cybersecurity.

The CWE list catalogs common software and hardware weakness types that can help programmers and software developers maintain information security. After all, adhering to security policies throughout the development lifecycle is much easier to manage than post-breach strategies.

How Many CWEs Are There?

There is only one CWE, managed by the Mitre Corporation. However, that list contains more than 600 categories. Its latest version (3.2) was released in January 2019.

The Top 7 Happiest Countries in the World (plus an inspiring honorable mention) for 2021:

Finland

Finland ranks as the world's happiest country in the 2021 report, with a score of 7.842 out of a possible 10. The report's authors credited Finns' strong sense of communal support and mutual trust with helping secure the #1 ranking. More importantly, they also credited it with helping the country as a whole navigate the COVID-19 pandemic. Additionally, Finns felt strongly that they were free to make their own choices and showed minimal suspicion of government corruption. Both of these factors are strong contributors to overall happiness.

Denmark

The second-happiest country in the world is Denmark, with a score of 7.620. Denmark's values for each of the six variables are quite comparable to Finland's. In fact, Denmark even outscored the leader in multiple categories, including GDP per capita, generosity, and perceived lack of corruption, suggesting that it may claim the top spot sometime in the near future.

Switzerland

As the third-happiest country in the world, Switzerland scored a total of 7.571 out of 10. In general, the Swiss are very healthy, with one of the world's lowest obesity rates and a long life expectancy. The Swiss also have a very high median salary, about 75% higher than that of the United States, and the highest GDP per capita in the top seven. Additionally, there is a strong sense of community in Switzerland and a firm belief that it is a safe and clean country, which is statistically true. Along with Iceland and Denmark, Switzerland is one of the world's safest countries.

Iceland

Iceland ranks as 2021’s fourth-happiest country in the entire world, with a total score of 7.554. Of the top seven happiest countries around the globe, Iceland has the highest feeling of social support (higher even than Finland, Norway, and Denmark, which all tied for second place). Iceland also had the second-highest generosity score in the top seven, though it’s worth noting that it ranked only 11th worldwide.

Netherlands

Edging out Norway for the honor of fifth-happiest country in the world is the Netherlands (also known as Holland to many tulip lovers), with a score of 7.464. The Netherlands scored higher in the generosity category than any other top-seven country and also displayed an impressive lack of perceived corruption.

Norway

The citizens of sixth-place Norway (7.392) feel well cared for by their government thanks to universal healthcare and free college tuition. Norwegians also enjoy a healthy work-life balance, working an average of 38 hours per week vs. 41.5 hours per week in the United States. Additionally, Norway has a low crime rate and a strong sense of community among its citizens, a quality it shares with many of the top seven.

Sweden

Seventh-place Sweden (7.363) ranks high, if not quite highest, in virtually every category measured. For example, Sweden scores higher on perceived lack of corruption than all but four countries worldwide (two of which are Finland and Denmark). It also has the fourteenth-highest GDP per capita of all 149 countries measured and the fourth-highest life expectancy in the top seven.